baller2vec: A Multi-Entity Transformer For Multi-Agent Spatiotemporal Modeling

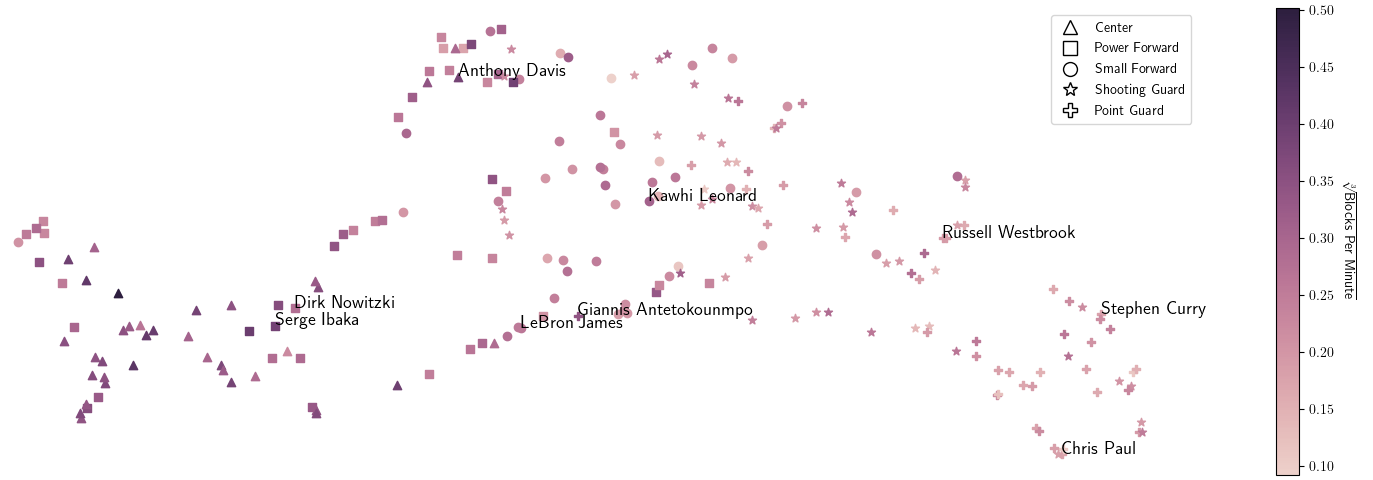

Multi-agent spatiotemporal modeling is a challenging task from both an algorithmic design and a computational complexity perspective. Recent work has explored the efficacy of traditional deep sequential models in this domain, but these architectures are slow and cumbersome to train, particularly as model size increases. Further, prior attempts to model interactions between agents across time have limitations, such as imposing an order on the agents or making assumptions about their relationships. In this paper, we introduce baller2vec, a multi-entity generalization of the standard Transformer that can, with minimal assumptions, simultaneously and efficiently integrate information across entities and time. We test the effectiveness of baller2vec for multi-agent spatiotemporal modeling by training it to perform two basketball-related tasks: (1) simultaneously modeling the trajectories of all players on the court and (2) modeling the trajectory of the ball. Not only does baller2vec learn to perform these tasks well (outperforming a graph recurrent neural network with a similar number of parameters by a wide margin), it also appears to "understand" the game of basketball, encoding idiosyncratic qualities of players in its embeddings and performing basketball-relevant functions with its attention heads.
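The abstract does not spell out how a Transformer can attend across entities and time without ordering the entities. One plausible mechanism, shown here purely as an illustrative sketch and not as the paper's confirmed implementation, is a block-causal attention mask: each entity's token at a given time step may attend to every entity at that step and at all earlier steps. The function name `block_causal_mask`, its parameters, and the time-major flattening are assumptions made for this example.

```python
def block_causal_mask(num_steps, num_entities):
    """Return allowed[i][j]: may token i attend to token j?

    Assumption: tokens are flattened time-major, so
    index = step * num_entities + entity.
    """
    size = num_steps * num_entities
    return [
        # Token j is visible to token i if j's time step is not in i's future.
        [(j // num_entities) <= (i // num_entities) for j in range(size)]
        for i in range(size)
    ]


# Hypothetical toy setting: 3 time steps, 2 entities per step.
mask = block_causal_mask(num_steps=3, num_entities=2)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
# xx....
# xx....
# xxxx..
# xxxx..
# xxxxxx
# xxxxxx
```

Because every entity within a time step sees the same set of tokens, the mask itself does not impose an order on the entities; it only enforces causality across time.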

NeurIPS 2021