Synthesizing Optical and SAR Imagery From Land Cover Maps and Auxiliary Raster Data

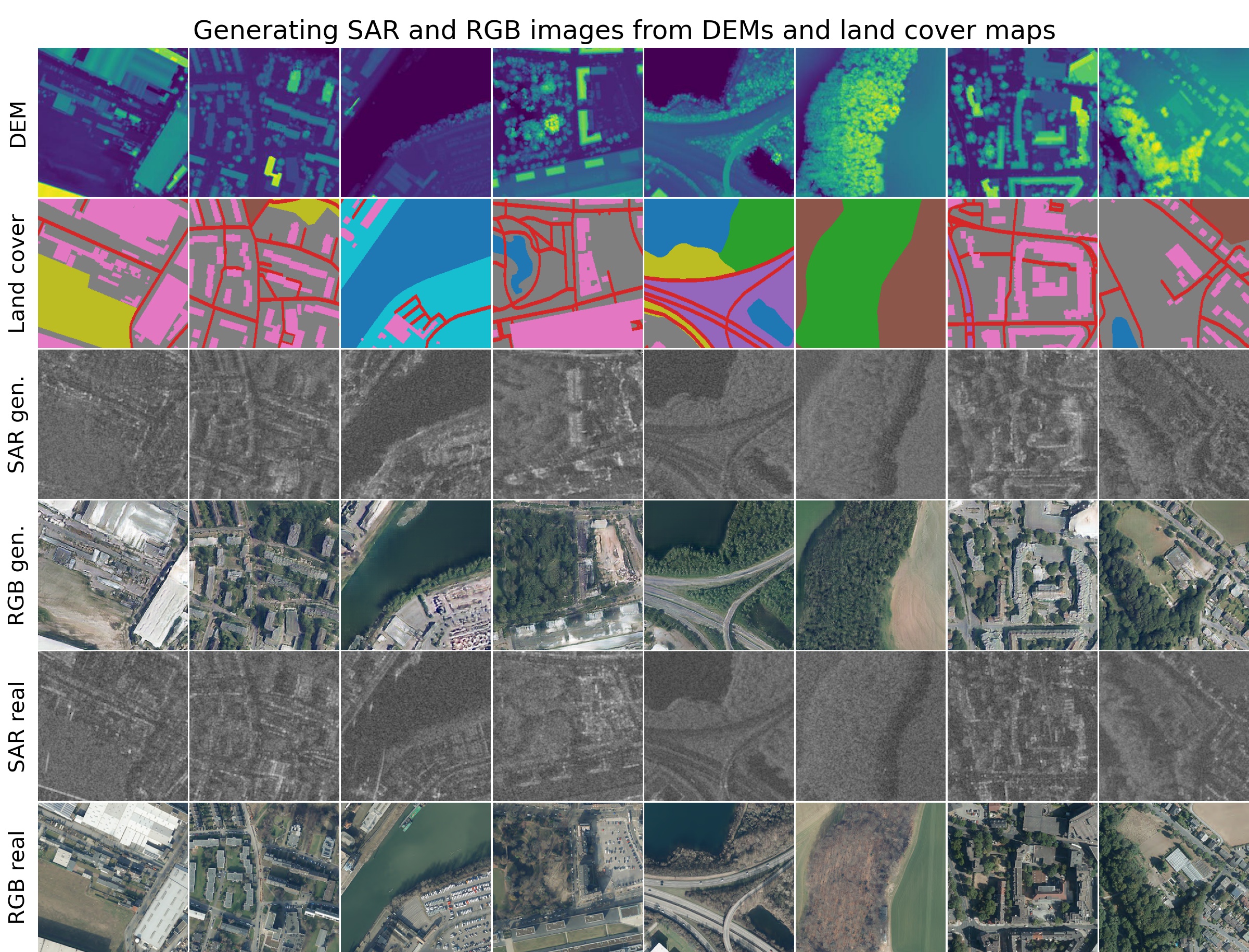

We synthesize both optical RGB and synthetic aperture radar (SAR) remote sensing images from land cover maps and auxiliary raster data using generative adversarial networks (GANs). In remote sensing, many types of data, such as digital elevation models (DEMs) or precipitation maps, are often not reflected in land cover maps but still influence image content or structure. Including such data in the synthesis process increases the quality of the generated images and gives more control over their characteristics. Spatially adaptive normalization layers fuse both inputs and are applied to a full generator architecture, consisting of an encoder and a decoder, to take full advantage of the information content in the auxiliary raster data. Our method successfully synthesizes medium (10 m) and high (1 m) resolution images when trained with the corresponding dataset. We show the advantage of fusing land cover maps with auxiliary information in terms of mean intersection over union (mIoU), pixel accuracy, and Fréchet inception distance (FID), computed with pretrained U-Net segmentation models. Handpicked images exemplify how fusing information avoids ambiguities in the synthesized images. By slightly editing the input, our method can be used to synthesize realistic changes, e.g., raising the water levels. The source code is available at https://github.com/gbaier/rs_img_synth and we published the newly created high-resolution dataset at https://ieee-dataport.org/open-access/geonrw.
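
To make the segmentation-based metrics named in the abstract concrete, the following is a minimal, dependency-free sketch of pixel accuracy and mIoU computed from a confusion matrix. It assumes that a reference label map and a predicted label map are already available as nested lists of integer class indices; how those maps are produced is not shown. The function names and the toy 2x3 label maps are hypothetical and are not taken from the authors' repository.

```python
def confusion_matrix(truth, pred, num_classes):
    """Count pixels; rows are reference classes, columns are predicted classes."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for truth_row, pred_row in zip(truth, pred):
        for t, p in zip(truth_row, pred_row):
            cm[t][p] += 1
    return cm


def pixel_accuracy(cm):
    """Fraction of pixels whose predicted class equals the reference class."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def mean_iou(cm):
    """Average per-class IoU = TP / (TP + FP + FN), skipping absent classes."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, reference differs
        fn = sum(cm[c]) - tp                       # reference c, predicted differs
        union = tp + fp + fn
        if union > 0:
            ious.append(tp / union)
    return sum(ious) / len(ious)


# Toy label maps with three hypothetical classes (0, 1, 2).
truth = [[0, 0, 1],
         [1, 2, 2]]
pred = [[0, 1, 1],
        [1, 2, 0]]

cm = confusion_matrix(truth, pred, num_classes=3)
print(pixel_accuracy(cm))  # 4 of 6 pixels match
print(mean_iou(cm))        # per-class IoUs are 1/3, 2/3 and 1/2
```

Classes that appear in neither map are skipped here so that they do not pull the mean down; other conventions exist, and the one used in the paper may differ.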
