Semantic RL with Action Grammars: Data-Efficient Learning of Hierarchical Task Abstractions

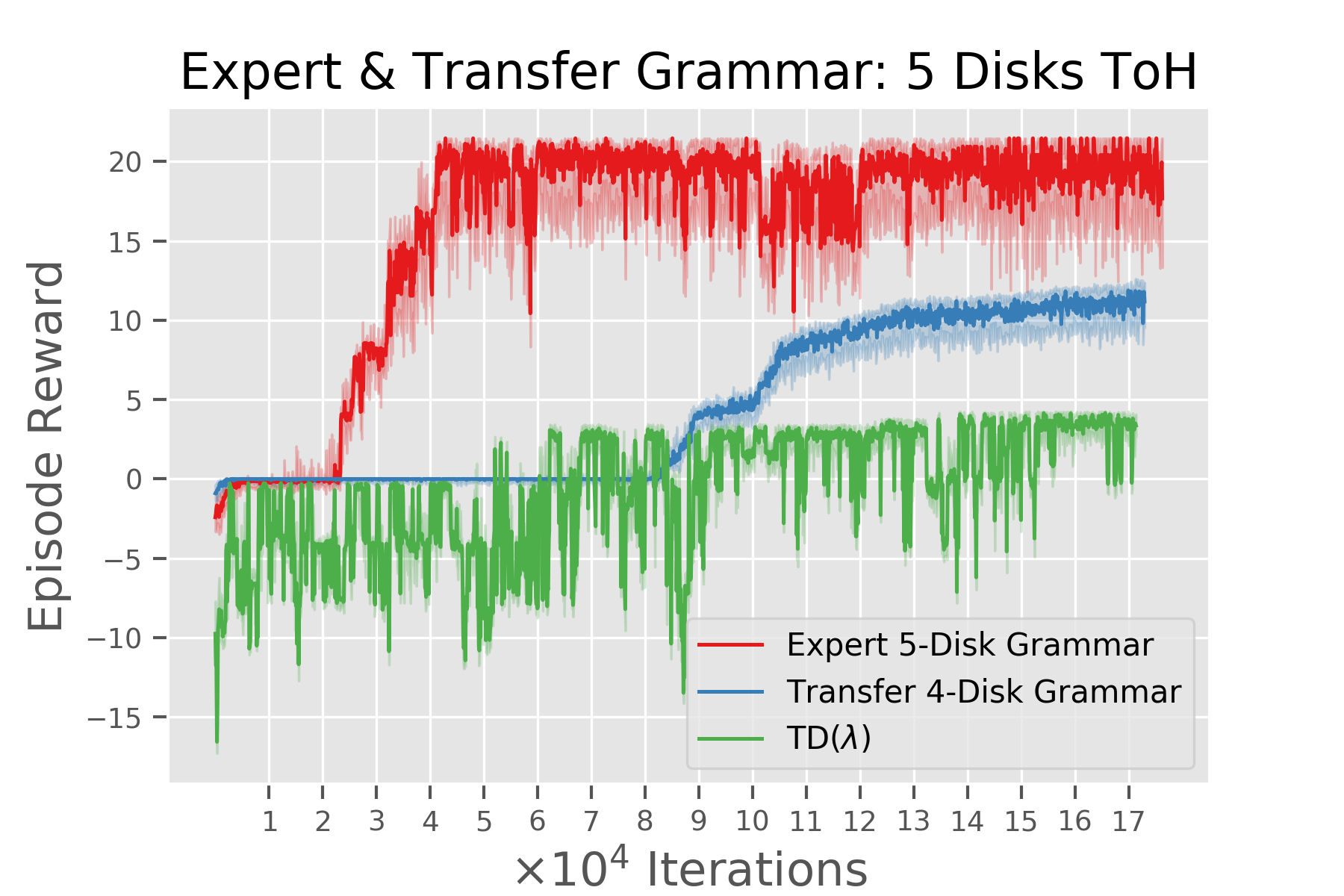

Hierarchical Reinforcement Learning algorithms have been applied successfully to temporal credit assignment problems with sparse reward signals. However, state-of-the-art algorithms require manual specification of sub-task structures, rely on a sample-inefficient exploration phase, or lack semantic interpretability. Humans, on the other hand, efficiently detect hierarchical sub-structures induced by their surroundings. It has been argued that this inference process applies universally to language, logical reasoning, and motor control. We therefore propose a cognitively inspired Reinforcement Learning architecture that uses grammar induction to identify sub-goal policies. By treating an on-policy trajectory as a sentence sampled from the policy-conditioned language of the environment, we identify hierarchical constituents with the help of unsupervised grammatical inference. The resulting set of temporal abstractions is called an action grammar (Pastra & Aloimonos, 2012) and unifies symbolic and connectionist approaches to Reinforcement Learning. It can be used to facilitate efficient imitation, transfer, and online learning.
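
To make the idea of reading a trajectory as a sentence concrete, the following is a minimal sketch rather than the architecture proposed here. The abstract does not name a specific inference algorithm, so the sketch swaps in a simple greedy digram-substitution scheme (in the spirit of Re-Pair) as a stand-in for unsupervised grammatical inference. The function names `induce_action_grammar` and `expand`, the `min_count` threshold, the `M0`, `M1`, ... rule labels, and the toy action names are all hypothetical choices made for illustration.

```python
from collections import Counter


def induce_action_grammar(trajectory, min_count=2):
    """Greedy digram substitution over a sequence of primitive actions.

    Illustrative stand-in for grammar induction (an assumption, not the
    paper's method): the most frequent adjacent pair of symbols is replaced
    by a fresh nonterminal until no pair occurs at least `min_count` times.
    Assumes primitive action names never collide with the labels "M0", "M1", ...
    """
    seq = list(trajectory)
    rules = {}  # nonterminal -> (left symbol, right symbol)
    while True:
        # Naive count of adjacent pairs; overlapping occurrences such as
        # (a, a) inside "a a a" are counted twice.
        pairs = Counter(zip(seq, seq[1:]))
        if not pairs:
            break
        pair, count = pairs.most_common(1)[0]
        if count < min_count:
            break
        name = f"M{len(rules)}"
        rules[name] = pair
        # Rewrite the sequence left to right, replacing non-overlapping
        # occurrences of the chosen pair with the new nonterminal.
        rewritten, i = [], 0
        while i < len(seq):
            if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
                rewritten.append(name)
                i += 2
            else:
                rewritten.append(seq[i])
                i += 1
        seq = rewritten
    return seq, rules


def expand(symbol, rules):
    """Unroll a symbol into the primitive actions it stands for."""
    if symbol not in rules:
        return [symbol]
    left, right = rules[symbol]
    return expand(left, rules) + expand(right, rules)


# Toy on-policy trajectory (hypothetical action names).
trajectory = ["up", "up", "right", "up", "up", "right", "left"]
compressed, rules = induce_action_grammar(trajectory)
# Each nonterminal is a candidate temporal abstraction (macro-action).
macros = {name: expand(name, rules) for name in rules}
```

Each induced rule can be read as a candidate macro-action built from shorter ones, which is one simple way to picture hierarchical constituents. How such constituents are turned into sub-goal policies is outside the scope of this sketch.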
