Modeling Affect-based Intrinsic Rewards for Exploration and Learning

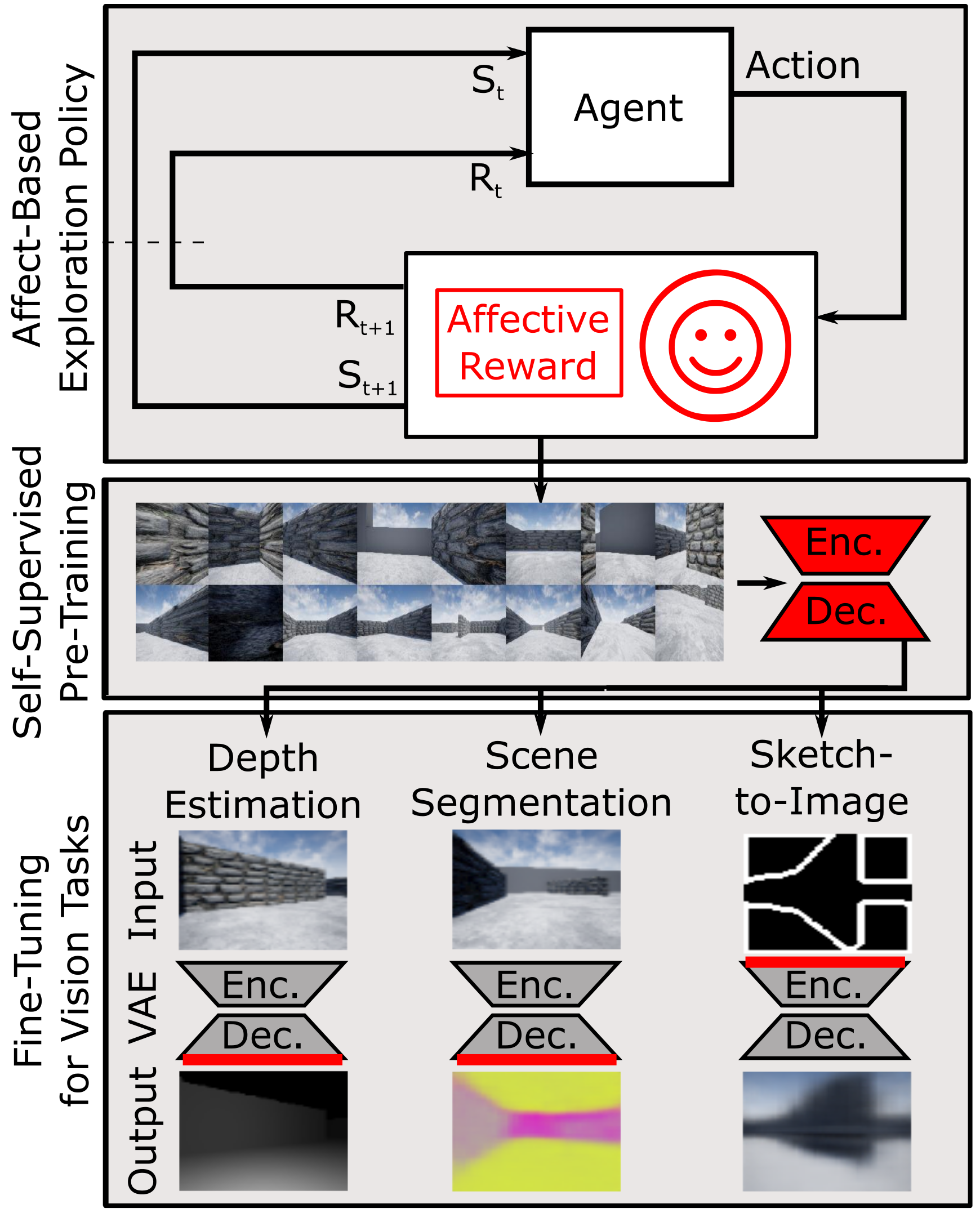

Positive affect has been linked to increased interest, curiosity and satisfaction in human learning. In reinforcement learning, extrinsic rewards are often sparse and difficult to define; intrinsically motivated learning can help address these challenges. We argue that positive affect is an important intrinsic reward that helps drive exploration useful for gathering experiences. We present a novel approach leveraging a task-independent reward function trained on spontaneous smile behavior that reflects the intrinsic reward of positive affect. To evaluate our approach, we trained several downstream computer vision tasks on data collected with our policy and with several baseline methods. We show that the policy based on our affective rewards increases the duration of episodes and the area explored, and reduces collisions. The result is faster learning on several downstream computer vision tasks.
