Diffusion models for Handwriting Generation

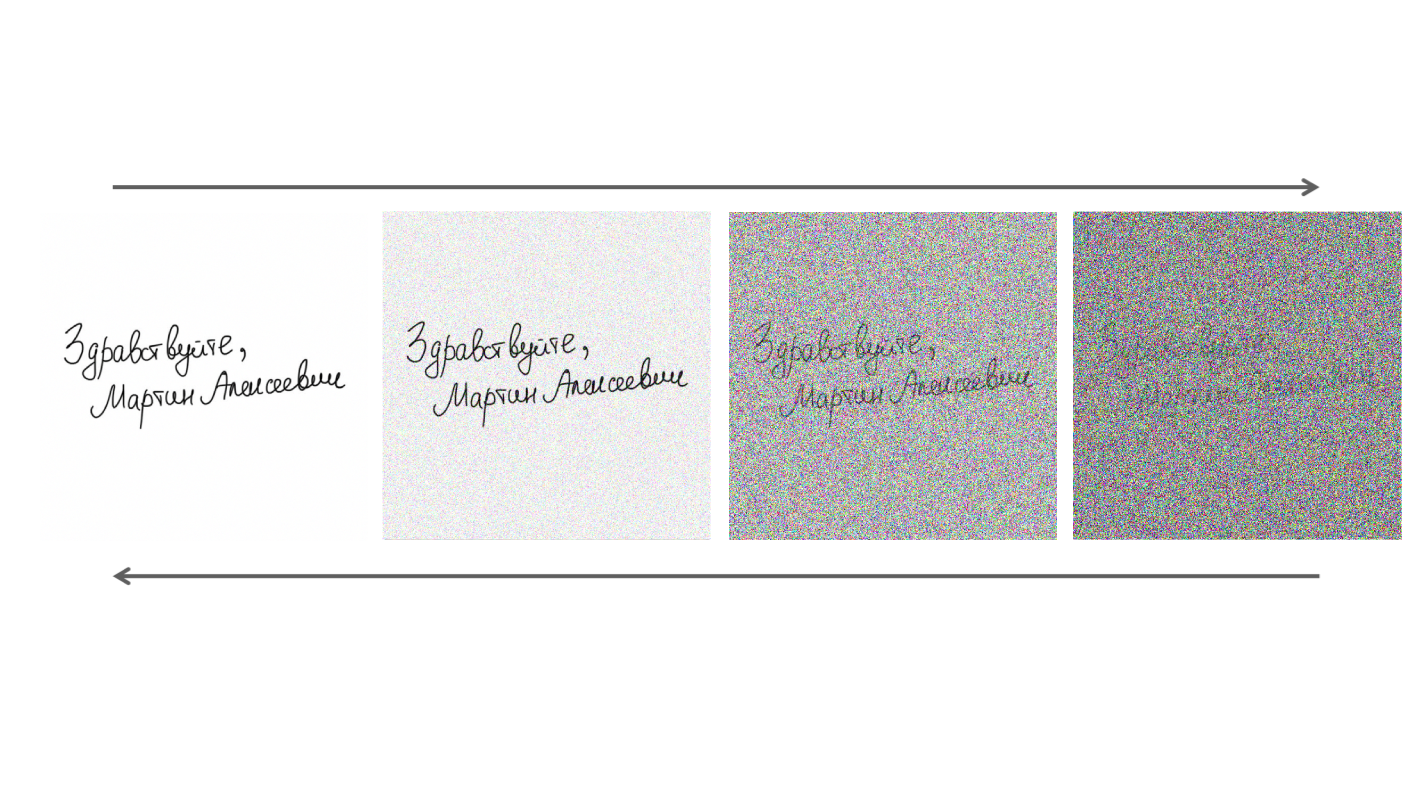

In this paper, we propose a diffusion probabilistic model for handwriting generation. Diffusion models are a class of generative models in which samples start as Gaussian noise and are gradually denoised to produce the output. Our method does not require text-recognition-based, writer-style-based, or adversarial loss functions, nor does it require training auxiliary networks. Our model incorporates a writer's stylistic features directly from image data, eliminating the need for user interaction during sampling. Experiments show that our model can generate realistic, high-quality images of handwritten text in a style similar to that of a given writer. Our implementation can be found at https://github.com/tcl9876/Diffusion-Handwriting-Generation
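To make the "start from Gaussian noise and gradually denoise" idea concrete, the following is a minimal sketch of a generic DDPM-style reverse sampling loop. It is not the authors' implementation. The linear noise schedule, the step count, and the function names (`make_schedule`, `oracle_noise`, `sample`) are all assumptions chosen for illustration. In particular, `oracle_noise` is a hypothetical stand-in for a trained noise-prediction network: it returns the exact noise for a toy dataset in which every example equals `target`.

```python
import math
import random


def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (an assumption): betas and cumulative alpha products."""
    betas = [
        beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        for i in range(num_steps)
    ]
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return betas, alpha_bars


def oracle_noise(x, t, alpha_bars, target):
    """Hypothetical stand-in for a trained network.

    Returns the exact noise in x at step t when every data point equals `target`.
    """
    a = alpha_bars[t]
    return [
        (xi - math.sqrt(a) * ti) / math.sqrt(1.0 - a)
        for xi, ti in zip(x, target)
    ]


def sample(target, num_steps=50, seed=0):
    """Run the reverse (denoising) process from pure Gaussian noise."""
    rng = random.Random(seed)
    betas, alpha_bars = make_schedule(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from Gaussian noise
    for t in reversed(range(num_steps)):
        eps = oracle_noise(x, t, alpha_bars, target)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        # Posterior mean: remove the predicted noise, then rescale.
        mean = [
            (xi - coef * ei) / math.sqrt(1.0 - betas[t])
            for xi, ei in zip(x, eps)
        ]
        # Fresh noise is added at every step except the last one.
        sigma = math.sqrt(betas[t]) if t > 0 else 0.0
        x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
    return x
```

Calling `sample([0.5, -1.0, 2.0])` walks the noise vector back to the target, because the oracle knows the data exactly. A real model would replace `oracle_noise` with a learned network, which in a handwriting setting would presumably also be conditioned on the text and on writer-style features.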
