Dual Pyramid Generative Adversarial Networks for Semantic Image Synthesis

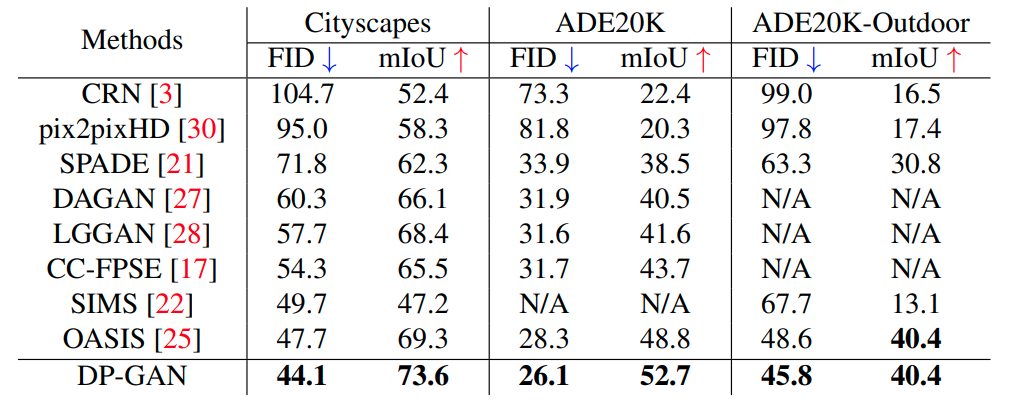

The goal of semantic image synthesis is to generate photo-realistic images from semantic label maps. It is highly relevant for tasks like content generation and image editing. Current state-of-the-art approaches, however, still struggle to generate realistic objects at various scales. In particular, small objects tend to fade away and large objects are often generated as collages of patches. To address this issue, we propose a Dual Pyramid Generative Adversarial Network (DP-GAN) that jointly learns the conditioning of spatially-adaptive normalization blocks at all scales, so that scale information is used bi-directionally, and that unifies supervision at different scales. Our qualitative and quantitative results show that the proposed approach generates images in which both small and large objects look more realistic than in images generated by state-of-the-art methods.
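To make the term "spatially-adaptive normalization" concrete, the following is a minimal sketch of the general idea, not the DP-GAN architecture itself. It builds a pyramid of label maps by nearest-neighbour downsampling and then normalizes a single-channel feature map, modulating each pixel with a scale and shift chosen by its semantic label. All names (`downsample_nearest`, `label_pyramid`, `spatially_adaptive_norm`) are hypothetical, and the per-class lookup tables `gamma` and `beta` are an assumption that stands in for the learned convolutions a real network would use. The joint, bi-directional learning of the conditioning across scales described above is not modelled here.

```python
from statistics import mean, pstdev


def downsample_nearest(label_map, factor):
    """Nearest-neighbour downsampling of a 2D label map by an integer factor."""
    return [row[::factor] for row in label_map[::factor]]


def label_pyramid(label_map, levels):
    """Label maps at full, 1/2, 1/4, ... resolution (finest first)."""
    return [downsample_nearest(label_map, 2 ** i) for i in range(levels)]


def spatially_adaptive_norm(features, label_map, gamma, beta, eps=1e-5):
    """Normalize a 2D feature map, then apply a per-pixel scale and shift.

    Assumption: gamma and beta are plain per-class lookup tables. In a
    trained network they would be predicted from the label map instead.
    """
    flat = [v for row in features for v in row]
    mu, sigma = mean(flat), pstdev(flat)
    return [
        [gamma[c] * (v - mu) / (sigma + eps) + beta[c]
         for v, c in zip(feat_row, label_row)]
        for feat_row, label_row in zip(features, label_map)
    ]


# Toy 4x4 label map with four classes, one per quadrant.
labels = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 3, 3],
    [2, 2, 3, 3],
]
pyramid = label_pyramid(labels, levels=3)  # 4x4, 2x2 and 1x1 label maps

# Modulate a toy 2x2 feature map with the 2x2 level of the pyramid.
features = [[0.0, 2.0], [2.0, 0.0]]
gamma = {0: 1.0, 1: 2.0, 2: 1.0, 3: 0.5}
beta = {0: 0.0, 1: 10.0, 2: -1.0, 3: 0.0}
modulated = spatially_adaptive_norm(features, pyramid[1], gamma, beta)
```

Because the scale and shift vary per pixel with the label, the layout information survives normalization at every resolution of the pyramid, which is the property the conditioning blocks rely on.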

Cityscapes

ADE20K
