Feature Likelihood Divergence: Evaluating the Generalization of Generative Models Using Samples

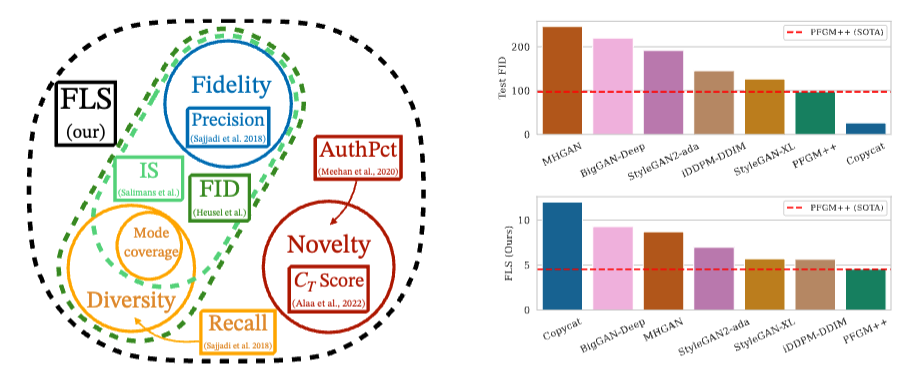

The past few years have seen impressive progress in the development of deep generative models capable of producing high-dimensional, complex, and photo-realistic data. However, current methods for evaluating such models remain incomplete: standard likelihood-based metrics do not always apply and rarely correlate with perceptual fidelity, while sample-based metrics, such as FID, are insensitive to overfitting, i.e., a failure to generalize beyond the training set. To address these limitations, we propose a new metric called the Feature Likelihood Divergence (FLD), a parametric sample-based metric that uses density estimation to provide a comprehensive trichotomic evaluation accounting for the novelty (i.e., difference from the training samples), fidelity, and diversity of generated samples. We empirically demonstrate the ability of FLD to identify overfitting problem cases, even when previously proposed metrics fail. We also extensively evaluate FLD on various image datasets and model classes, demonstrating its ability to match the intuitions of previous metrics like FID while offering a more comprehensive evaluation of generative models. Code is available at https://github.com/marcojira/fld.
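To give some intuition for why density estimation on samples can expose overfitting, here is a toy sketch. It is not the authors' FLD implementation (see the repository linked above for that). It assumes a fixed-bandwidth Gaussian kernel density estimate centered on the generated samples, 2-D points standing in for learned features, and a hand-picked bandwidth `sigma`; all function and variable names are hypothetical. The idea it illustrates: a generator that copies the training set assigns high likelihood to training points but low likelihood to held-out test points, and the gap between the two hints at memorization.

```python
import math


def log_gaussian(x, mean, sigma):
    """Log density of an isotropic Gaussian N(mean, sigma^2 I) at x."""
    d = len(x)
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, mean))
    return -0.5 * sq_dist / sigma**2 - d * math.log(sigma) - 0.5 * d * math.log(2 * math.pi)


def logsumexp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def mean_log_likelihood(points, centers, sigma):
    """Average log-likelihood of `points` under an equal-weight Gaussian
    mixture with one component per entry of `centers` (assumed setup)."""
    log_n = math.log(len(centers))
    total = 0.0
    for x in points:
        total += logsumexp([log_gaussian(x, c, sigma) for c in centers]) - log_n
    return total / len(points)


# Toy "features": two training points and two held-out test points.
train = [[0.0, 0.0], [1.0, 1.0]]
test = [[0.5, 0.5], [0.2, 0.8]]
sigma = 0.1  # hand-picked bandwidth, an assumption of this sketch

# A generator that memorized the training set vs. one producing new points.
memorized = [list(p) for p in train]
novel = [[0.4, 0.6], [0.6, 0.4]]

# Train-minus-test likelihood gap: large and positive suggests memorization.
gap_memorized = (mean_log_likelihood(train, memorized, sigma)
                 - mean_log_likelihood(test, memorized, sigma))
gap_novel = (mean_log_likelihood(train, novel, sigma)
             - mean_log_likelihood(test, novel, sigma))

print(f"gap (memorized generator): {gap_memorized:.2f}")
print(f"gap (novel generator):     {gap_novel:.2f}")
```

In this toy setup the memorizing generator shows a large positive train-test gap while the novel generator does not. A metric that compares generated samples only against the training distribution cannot distinguish the two cases in this way.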

NeurIPS 2023

CIFAR-10

ImageNet

FFHQ