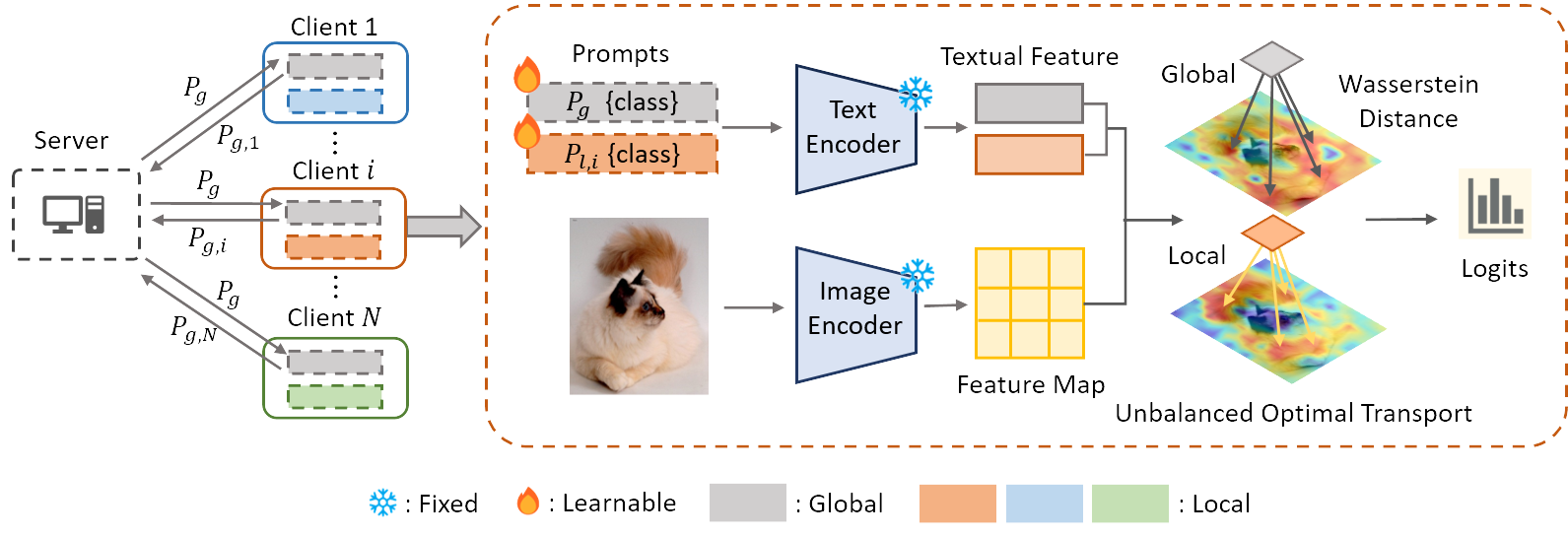

Global and Local Prompts Cooperation via Optimal Transport for Federated Learning

Prompt learning in pretrained visual-language models has shown remarkable flexibility across various downstream tasks. Leveraging its inherently lightweight nature, recent research has attempted to integrate powerful pretrained models into federated learning frameworks to simultaneously reduce communication costs and promote local training on insufficient data. Despite these efforts, current federated prompt learning methods lack specialized designs to systematically address severe data heterogeneity, e.g., data distributions involving both label and feature shifts. To address this challenge, we present Federated Prompts Cooperation via Optimal Transport (FedOTP), which introduces efficient collaborative prompt learning strategies to capture diverse category traits on a per-client basis. Specifically, for each client, we learn a global prompt to extract consensus knowledge among clients, and a local prompt to capture client-specific category characteristics. Unbalanced Optimal Transport is then employed to align local visual features with these prompts, striking a balance between global consensus and local personalization. By relaxing one of the equality constraints, FedOTP enables the prompts to focus solely on the core regions of image patches. Extensive experiments on datasets with various types of heterogeneity demonstrate that FedOTP outperforms state-of-the-art methods.
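To make the idea of "relaxing one of the equality constraints" concrete, the following is a minimal NumPy sketch of entropic optimal transport in which the patch-side marginal is an inequality and the prompt-side marginal stays an equality. It is an illustration under stated assumptions, not the authors' implementation: the abstract does not specify the solver, the cost function, or any hyperparameters, so the cosine cost, the scaling iteration, the uniform marginals, and the names `relaxed_ot_plan`, `cosine_cost`, `gamma`, and `eps` are all hypothetical choices made for this example.

```python
import numpy as np


def cosine_cost(patches, prompts):
    """Cosine distance between patch features (M, d) and prompt features (N, d)."""
    p = patches / np.linalg.norm(patches, axis=1, keepdims=True)
    q = prompts / np.linalg.norm(prompts, axis=1, keepdims=True)
    return 1.0 - p @ q.T


def relaxed_ot_plan(cost, gamma=0.8, eps=0.5, n_iters=500):
    """Entropic OT with one relaxed marginal (hypothetical helper).

    cost  : (M, N) array, cost between M image patches and N prompts.
    gamma : total transported mass, 0 < gamma <= 1 (assumed hyperparameter).
    eps   : entropic regularization strength (assumed hyperparameter).

    Rows (patches):  T.sum(axis=1) <= a   relaxed, a patch may go unused.
    Cols (prompts):  T.sum(axis=0) == b   each prompt receives gamma / N.
    """
    M, N = cost.shape
    a = np.full(M, 1.0 / M)      # upper bound on the mass leaving each patch
    b = np.full(N, gamma / N)    # exact mass arriving at each prompt
    K = np.exp(-cost / eps)      # Gibbs kernel, strictly positive
    v = np.ones(N)
    for _ in range(n_iters):
        # Inequality side: scale a row down only if it would exceed its budget.
        u = np.minimum(a / (K @ v), 1.0)
        # Equality side: the usual Sinkhorn column rescaling.
        v = b / (K.T @ u)
    return u[:, None] * K * v[None, :]


# Toy stand-ins for visual patch features and the two prompt features.
rng = np.random.default_rng(0)
patches = rng.normal(size=(6, 8))   # 6 patches, feature dimension 8
prompts = rng.normal(size=(2, 8))   # row 0: "global" prompt, row 1: "local" prompt

T = relaxed_ot_plan(cosine_cost(patches, prompts))
print("mass per prompt:", T.sum(axis=0))
print("mass per patch: ", T.sum(axis=1))
```

Because only `gamma` of the total patch mass has to be transported, patches that are expensive to match to either prompt can receive little mass, which is one way to read the abstract's claim that the prompts focus on core image regions. With `gamma = 1` and uniform marginals the inequality is forced to hold with equality, and the sketch reduces to a standard balanced Sinkhorn iteration.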

CIFAR-10

CIFAR-100

Oxford 102 Flower

DTD

DomainNet

Food-101

Caltech-101
