Learning Calibrated-Guidance for Object Detection in Aerial Images

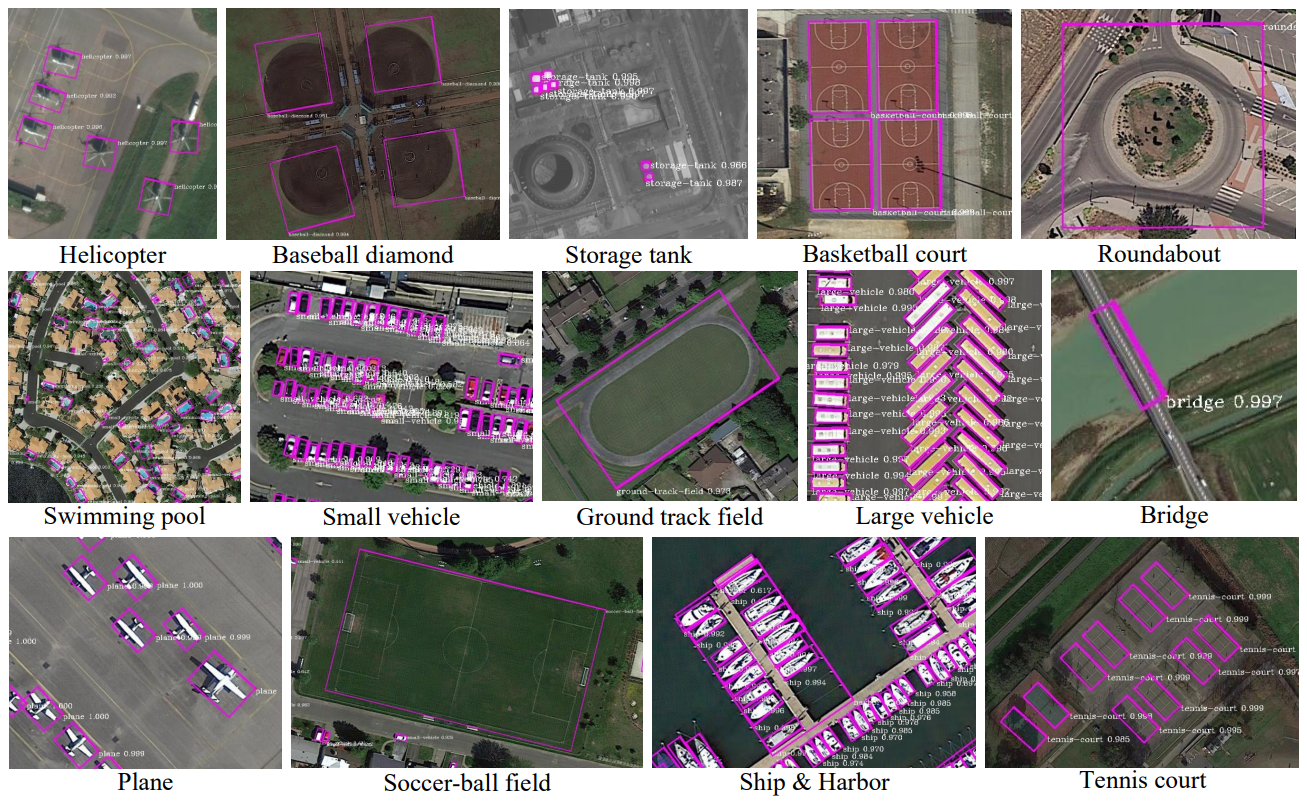

Object detection is one of the most fundamental yet challenging research topics in the domain of computer vision. Recently, the study on this topic in aerial images has made tremendous progress. However, complex background and worse imaging quality are obvious problems in aerial object detection. Most state-of-the-art approaches tend to develop elaborate attention mechanisms for the space-time feature calibrations with arduous computational complexity, while surprisingly ignoring the importance of feature calibrations in channel-wise. In this work, we propose a simple yet effective Calibrated-Guidance (CG) scheme to enhance channel communications in a feature transformer fashion, which can adaptively determine the calibration weights for each channel based on the global feature affinity correlations. Specifically, for a given set of feature maps, CG first computes the feature similarity between each channel and the remaining channels as the intermediary calibration guidance. Then, re-representing each channel by aggregating all the channels weighted together via the guidance operation. Our CG is a general module that can be plugged into any deep neural networks, which is named as CG-Net. To demonstrate its effectiveness and efficiency, extensive experiments are carried out on both oriented object detection task and horizontal object detection task in aerial images. Experimental results on two challenging benchmarks (DOTA and HRSC2016) demonstrate that our CG-Net can achieve the new state-of-the-art performance in accuracy with a fair computational overhead. The source code has been open sourced at https://github.com/WeiZongqi/CG-Net

PDF Abstract

DOTA

DOTA