Learning Permutations with Sinkhorn Policy Gradient

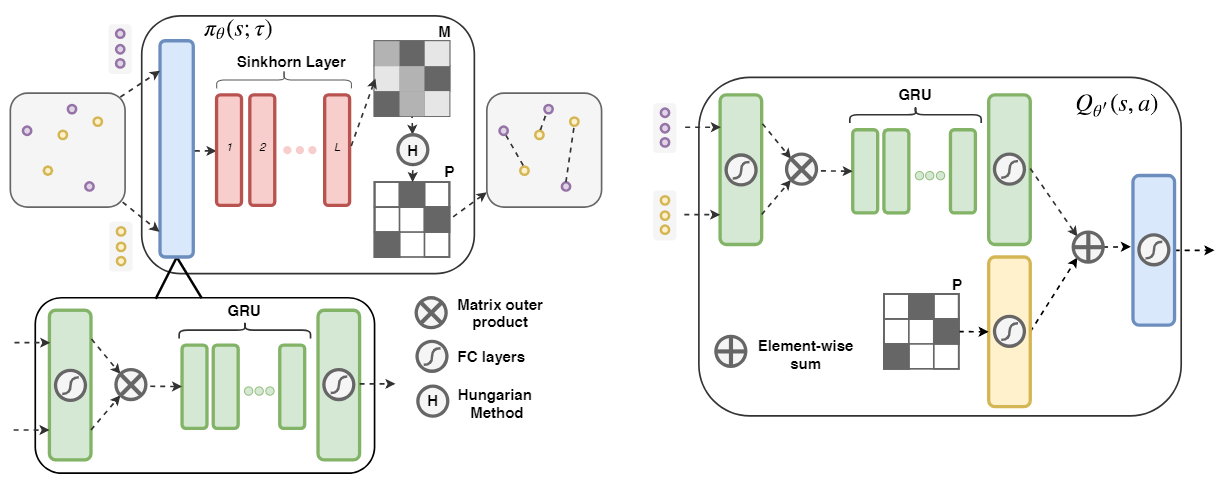

Many problems at the intersection of combinatorics and computer science require solving for a permutation that optimally matches, ranks, or sorts some data. These problems usually have a task-specific, often non-differentiable objective function that data-driven algorithms can use as a learning signal. In this paper, we propose the Sinkhorn Policy Gradient (SPG) algorithm for learning policies on permutation matrices. The actor-critic neural network architecture we introduce for SPG uniquely decouples representation learning of the state space from the highly-structured action space of permutations with a temperature-controlled Sinkhorn layer. The Sinkhorn layer produces continuous relaxations of permutation matrices so that the actor-critic architecture can be trained end-to-end. Our empirical results show that agents trained with SPG can perform competitively on sorting, the Euclidean TSP, and matching tasks. We also observe that SPG is significantly more data efficient at the matching task than the baseline methods, which indicates that SPG is conducive to learning representations that are useful for reasoning about permutations.

PDF Abstract