Proto-CLIP: Vision-Language Prototypical Network for Few-Shot Learning

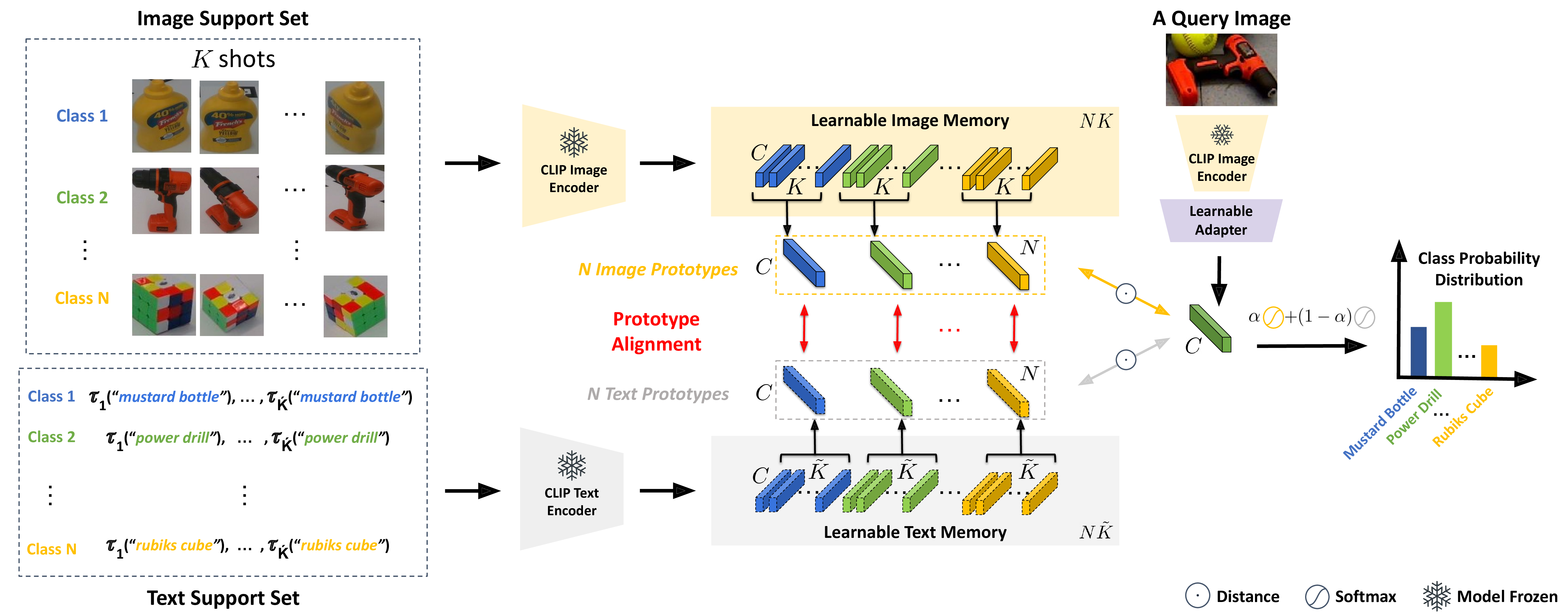

We propose a novel framework for few-shot learning that leverages large-scale vision-language models such as CLIP. Motivated by unimodal prototypical networks for few-shot learning, we introduce PROTO-CLIP, which uses both image prototypes and text prototypes. Specifically, PROTO-CLIP jointly adapts the image encoder and text encoder in CLIP using few-shot examples. The two encoders are used to compute prototypes of image classes for classification. During adaptation, we propose aligning the image and text prototypes of corresponding classes. This alignment benefits few-shot classification because both types of prototypes contribute to the prediction. We demonstrate the effectiveness of our method through experiments on benchmark datasets for few-shot learning and in real-world robot perception.
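The idea of classifying with image and text prototypes, and of aligning the two, can be illustrated with a small NumPy sketch. It is not the authors' implementation: the abstract does not give the formulas, so the cosine-similarity scoring, the mixing weight `alpha`, the `temperature`, and the symmetric contrastive form of `alignment_loss` are all assumptions made for illustration. Synthetic vectors stand in for the outputs of the CLIP image and text encoders.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-12):
    # Scale vectors to unit length so dot products are cosine similarities.
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)

def log_softmax(z, axis=-1):
    # Numerically stable log-softmax.
    z = z - z.max(axis=axis, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=axis, keepdims=True))

def image_prototypes(support_emb, support_labels, num_classes):
    # One prototype per class: the mean of that class's support embeddings.
    protos = np.stack([support_emb[support_labels == c].mean(axis=0)
                       for c in range(num_classes)])
    return l2_normalize(protos)

def classify(query_emb, img_protos, txt_protos, alpha=0.5, temperature=0.07):
    # Assumed scoring rule: mix the class posteriors obtained from the
    # image prototypes and from the text prototypes.
    q = l2_normalize(query_emb)
    p_img = np.exp(log_softmax(q @ img_protos.T / temperature))
    p_txt = np.exp(log_softmax(q @ txt_protos.T / temperature))
    return alpha * p_img + (1.0 - alpha) * p_txt

def alignment_loss(img_protos, txt_protos, temperature=0.07):
    # Assumed alignment objective: symmetric contrastive loss that pulls the
    # image and text prototypes of the same class together.
    logits = img_protos @ txt_protos.T / temperature
    idx = np.arange(len(img_protos))
    i2t = log_softmax(logits, axis=1)[idx, idx].mean()
    t2i = log_softmax(logits, axis=0)[idx, idx].mean()
    return -0.5 * (i2t + t2i)

# Synthetic stand-ins for encoder outputs (hypothetical data, not CLIP features).
rng = np.random.default_rng(0)
num_classes, shots, dim = 3, 4, 8
centers = np.eye(num_classes, dim)                      # one axis per class
support_labels = np.repeat(np.arange(num_classes), shots)
support_emb = centers[support_labels] + 0.01 * rng.standard_normal((num_classes * shots, dim))
txt_protos = l2_normalize(centers + 0.01 * rng.standard_normal((num_classes, dim)))

img_protos = image_prototypes(support_emb, support_labels, num_classes)
query_labels = np.arange(num_classes)
query_emb = centers[query_labels] + 0.01 * rng.standard_normal((num_classes, dim))

probs = classify(query_emb, img_protos, txt_protos)
preds = probs.argmax(axis=1)
loss = alignment_loss(img_protos, txt_protos)
print(preds, float(loss))
```

In an actual adaptation loop the encoders (or adapters on top of them) would be trainable and the alignment term would be minimized alongside the classification loss; the sketch only evaluates both quantities once on fixed vectors.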


ImageNet

UCF101

Oxford 102 Flower

Stanford Cars

DTD

Food-101

Caltech-101

EuroSAT

FGVC-Aircraft

Oxford-IIIT Pet Dataset

FewSOL