TinyQMIX: Distributed Access Control for mMTC via Multi-agent Reinforcement Learning

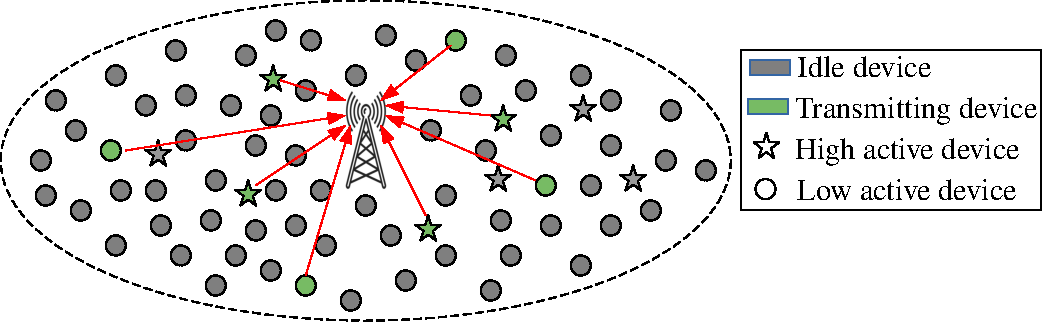

Distributed access control is a crucial component of massive machine-type communication (mMTC). In this scenario, centralized resource allocation does not scale because the base station would have to send resource configurations frequently to a massive number of devices. We therefore investigate distributed reinforcement learning, in which devices select resources without relying on centralized control. Another important feature of mMTC is that traffic is sporadic and changes dynamically. Existing studies on distributed access control either assume a static traffic load or adapt to dynamic traffic only gradually. We minimize the adaptation period by training TinyQMIX, a lightweight multi-agent deep reinforcement learning model, to learn a distributed wireless resource selection policy under various traffic patterns before deployment. As a result, the trained agents can adapt quickly to dynamic traffic and provide low access delay. Numerical results are presented to support our claims.
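To make the multi-agent idea concrete, the following is a minimal sketch of the monotonic value mixing used by generic QMIX-style methods, not the TinyQMIX architecture itself, whose details are not given in the abstract. All names, layer sizes, and the single-layer hypernetworks (`init_mixer_params`, `qmix_total`, `hidden`) are assumptions made for illustration. During centralized training, per-agent Q-values are combined into a joint value with non-negative, state-conditioned weights; at deployment each device needs only its own Q-values to pick a resource.

```python
import numpy as np


def elu(x):
    # Exponential linear unit; monotonically increasing in x.
    return np.where(x > 0, x, np.exp(np.minimum(x, 0.0)) - 1.0)


def init_mixer_params(n_agents, state_dim, hidden, rng):
    # Hypothetical single-layer hypernetworks mapping the global state
    # to the weights and biases of the mixing network.
    return {
        "hyper_w1": rng.normal(size=(state_dim, n_agents * hidden)),
        "hyper_b1": rng.normal(size=(state_dim, hidden)),
        "hyper_w2": rng.normal(size=(state_dim, hidden)),
        "hyper_b2": rng.normal(size=(state_dim, 1)),
    }


def qmix_total(agent_qs, state, params):
    # Combine the chosen per-agent Q-values into a joint value Q_tot.
    n_agents = agent_qs.shape[0]
    # Absolute values keep the mixing weights non-negative, so Q_tot is
    # non-decreasing in every agent's Q-value (the QMIX monotonicity idea).
    w1 = np.abs(state @ params["hyper_w1"]).reshape(n_agents, -1)
    b1 = state @ params["hyper_b1"]
    w2 = np.abs(state @ params["hyper_w2"])
    b2 = state @ params["hyper_b2"]
    hidden = elu(agent_qs @ w1 + b1)
    return float(hidden @ w2 + b2[0])


def select_resource(local_qs):
    # Decentralized execution: each device greedily picks the resource
    # with the highest local Q-value, with no signaling from the base station.
    return int(np.argmax(local_qs))
```

Because the mixer is monotonic, each agent maximizing its own Q-value also maximizes the joint value, which is why the mixer can be dropped once training is finished.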
