Prompted Contextual Transformer for Incomplete-View CT Reconstruction

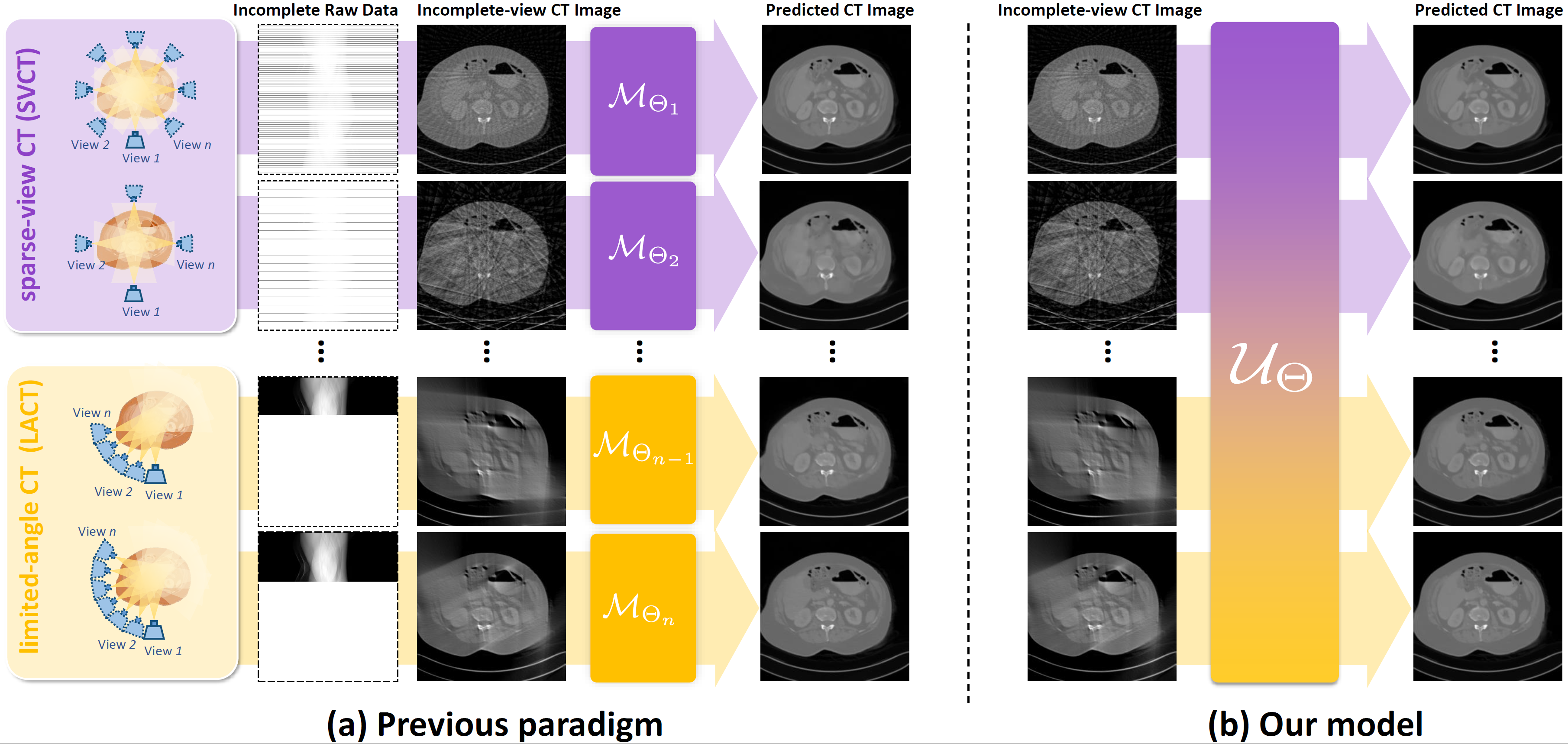

Incomplete-view computed tomography (CT) can shorten the data acquisition time and allow scanning of large objects, including sparse-view and limited-angle scenarios, each with various settings, such as different view numbers or angular ranges. However, the reconstructed images present severe, varying artifacts due to different missing projection data patterns. Existing methods tackle these scenarios/settings separately and individually, which are cumbersome and lack the flexibility to adapt to new settings. To enjoy the multi-setting synergy in a single model, we propose a novel Prompted Contextual Transformer (ProCT) for incomplete-view CT reconstruction. The novelties of ProCT lie in two folds. First, we devise a projection view-aware prompting to provide setting-discriminative information, enabling a single ProCT to handle diverse incomplete-view CT settings. Second, we propose artifact-aware contextual learning to sense artifact pattern knowledge from in-context image pairs, making ProCT capable of accurately removing the complex, unseen artifacts. Extensive experimental results on two publicly available clinical CT datasets demonstrate the superior performance of ProCT over state-of-the-art methods -- including single-setting models -- on a wide range of incomplete-view CT settings, strong transferability to unseen datasets and scenarios, and improved performance when sinogram data is available. The code is available at: https://github.com/Masaaki-75/proct

PDF Abstract